Building a Prototype of the Cosmos

Supercomputers offer a sneak peek at what we’ll be able to see through the next US-funded telescopes.

DURHAM, N.C. -- How would it feel to peer into the night sky and behold millions of galaxies across a vast swath of space? What would it be like to hunt for worlds beyond our solar system, or spot the fiery deaths of stars?

In the next few years, two U.S.-funded telescopes will allow astronomers to find out. But before that happens, a Duke researcher has been leading an effort, under a broader project called OpenUniverse, to create the most realistic preview yet of what they will see once the missions get underway.

In his office on Duke’s West Campus, Duke physics professor Michael Troxel offers a sneak peek at what we can expect from the Vera C. Rubin Observatory, which starts operating in 2025, and NASA’s Nancy Grace Roman Space Telescope, which is slated to launch by 2027.

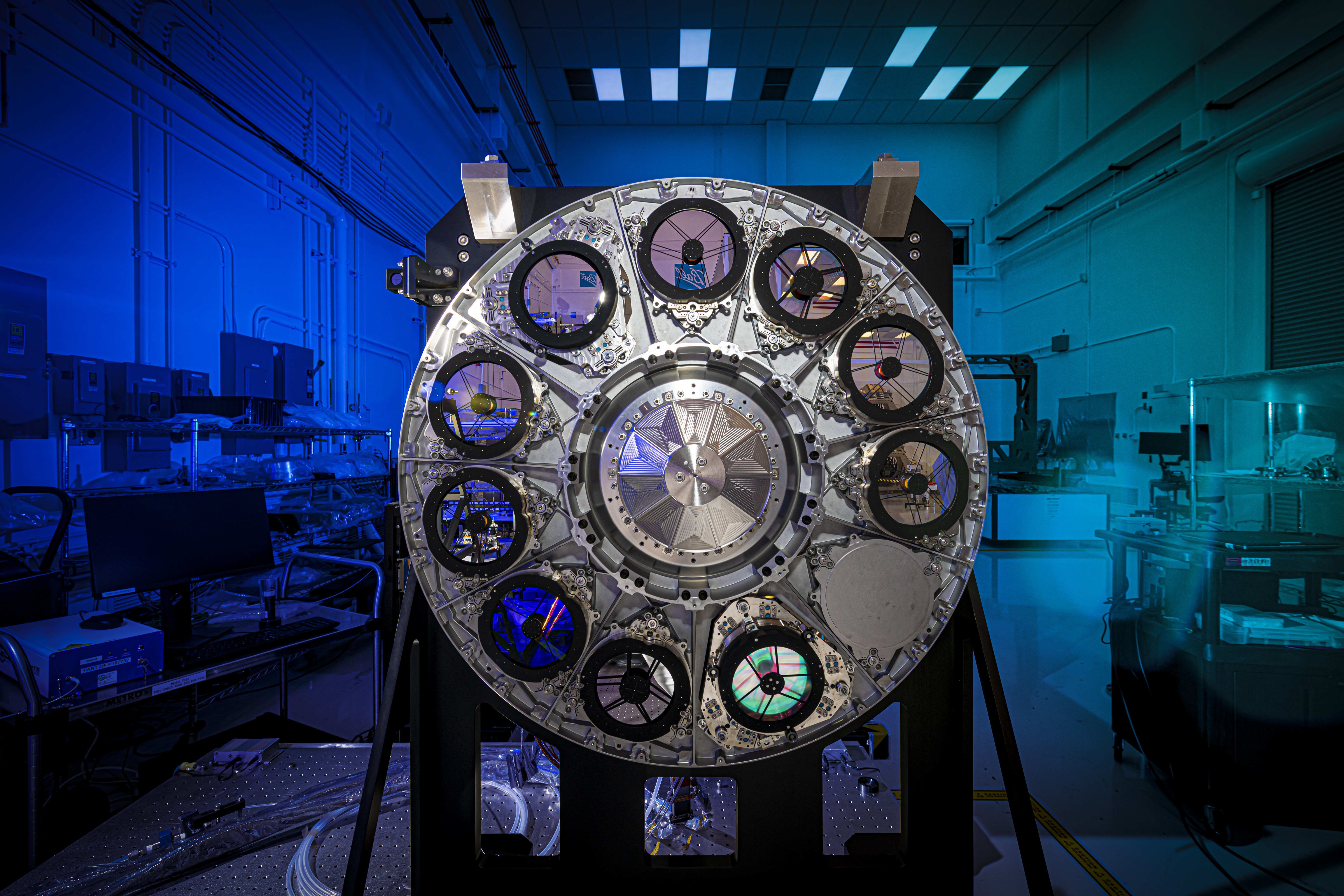

Jointly funded by the National Science Foundation and the Department of Energy, the Rubin Observatory will use a giant 8.4-meter telescope perched on a Chilean mountaintop to survey nearly half of the entire sky.

At the same time, from its orbit a million miles from Earth, NASA’s Roman telescope will peer close to the edge of the observable universe, revealing faint and faraway objects in crisp detail.

By pairing the telescopes’ observations, scientists hope to better understand longstanding mysteries such as why the universe seems to be expanding at an ever-faster rate.

Combining the two datasets, however, will present unique technical challenges.

That’s because once they’re up and running, the two telescopes will produce unprecedented amounts of data, Troxel says. Astronomers anticipate a combined total of 80 petabytes over the lifetimes of these instruments, three times the digital footprint of the U.S. Library of Congress.

To help researchers prepare for this data deluge, Troxel is leading an effort to simulate, galaxy by galaxy, what these telescopes will see, matching the actual data as closely as possible so that scientists can explore and sift through it the way they would the real thing.

With a few clicks of a mouse, he pulls up a simulated image of what the combined data reveal in a single tiny patch of sky.

It isn’t much real estate in space. Ten such images would barely cover the full moon, and the actual surveys will span tens of thousands of times more sky. Nevertheless, the image contains some 80,000 galaxies and other objects.

Some of them are so faint and far away -- up to 20 billion light years -- that they’re “hard to tell from a speck of dust on my screen,” Troxel says.

Nearly every dot or speck of light represents a distant galaxy. Instead of vast clouds of gas and dust, these galaxies are made of computer code and live in a virtual universe in the cloud.

The images are so realistic, Troxel says, that even experts can’t always tell at first glance whether they contain simulated telescope data or the real thing.

Achieving that degree of realism is a huge number-crunching task, Troxel says. It involves simulating the light from every star and galaxy and tracing the path that light takes through space over billions of years to reach the telescopes.

The team’s solution was to turn to a supercomputer capable of thousands of trillions of calculations per second. Using the now-retired Theta cluster at Argonne National Laboratory in Illinois, they produced some four million simulated images of the cosmos, accomplishing in nine days what would have taken 300 years on a standard laptop.

The run consumed more than 55 million CPU hours and required over half a year of preparation by dozens of experts, not to mention coordination among some 1,300 researchers across multiple cosmology teams, Troxel says.

For now, the team has released a 10-terabyte subset of the complete 400-terabyte package, with the remaining data to follow this fall once they have been processed.

Researchers will use the simulated images for a dress rehearsal, testing the new methods and algorithms they will apply once they get their hands on the real data.

Working out bugs on simulated data before encountering them in real observations will let them hit the ground running once the data start pouring in.

"It means that we'll be ready. We can do the science on day one."

Michael Troxel, associate professor of physics

Credit: This work was made possible thanks to OpenUniverse, the LSST Dark Energy Science Collaboration, RAPID Project Infrastructure Teams, the Roman High-Latitude Imaging Survey and the Supernova Cosmology Project.